In this activity, we restore a motion-blurred image corrupted with Gaussian noise using a Wiener filter and a pseudo-Wiener filter.

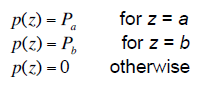

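In Fourier space, the degraded image is given by

$$G(u,v) = H(u,v)\,F(u,v) + N(u,v)$$

where G is the degraded image, F is the original image, N is the noise, and H is the transfer function given by

$$H(u,v) = \frac{T}{\pi(ua+vb)}\,\sin[\pi(ua+vb)]\,e^{-j\pi(ua+vb)}$$

where T is the duration of exposure, and a and b are the total displacements along the spatial directions corresponding to u and v, respectively.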
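The degradation step can be sketched in Python with NumPy. This is a minimal illustration, not the code used for the figures: it assumes the standard uniform linear motion blur transfer function H = T·sinc(ua+vb)·exp(-jπ(ua+vb)), centered integer frequency indices, and zero-mean Gaussian noise added in the spatial domain (which is equivalent to adding N in Fourier space). The function names and the `noise_sigma` and `seed` parameters are hypothetical.

```python
import numpy as np

def motion_blur_transfer(shape, a=0.05, b=0.05, T=1.0):
    """Transfer function H(u, v) for uniform linear motion blur (assumed form)."""
    rows, cols = shape
    # Centered integer frequency indices (an assumed convention).
    u = np.arange(rows) - rows // 2
    v = np.arange(cols) - cols // 2
    U, V = np.meshgrid(u, v, indexing="ij")
    s = U * a + V * b
    # np.sinc(x) = sin(pi x) / (pi x) and equals 1 at x = 0,
    # which avoids the 0/0 at the origin of the analytic formula.
    return T * np.sinc(s) * np.exp(-1j * np.pi * s)

def degrade(image, H, noise_sigma=0.01, seed=0):
    """Blur `image` with H in Fourier space, then add Gaussian noise."""
    rng = np.random.default_rng(seed)
    F = np.fft.fftshift(np.fft.fft2(image))      # centered spectrum of the original
    blurred = np.real(np.fft.ifft2(np.fft.ifftshift(H * F)))
    noise = rng.normal(0.0, noise_sigma, image.shape)
    return blurred + noise
```

With a = b = 0 the transfer function reduces to the constant T, so the "blur" only rescales the image; this is a convenient sanity check before trying a = 0.05, b = 0.05.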

Methodology

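A test image is first degraded using the above equations. Restoration follows using a Wiener filter given by

$$\hat{F}(u,v) = \left[\frac{1}{H(u,v)}\,\frac{|H(u,v)|^2}{|H(u,v)|^2 + S_n(u,v)/S_f(u,v)}\right] G(u,v)$$

where $S_n$ and $S_f$ are the power spectra of the noise and the original image, respectively. However, in real-world situations the noise and the original image are seldom known, so we use a pseudo-Wiener filter given by

$$\hat{F}(u,v) = \left[\frac{1}{H(u,v)}\,\frac{|H(u,v)|^2}{|H(u,v)|^2 + K}\right] G(u,v)$$

where K is a constant.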
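A minimal sketch of both filters follows, assuming the standard Wiener form in which the restored spectrum is (1/H)·|H|²/(|H|² + Sn/Sf)·G, with the ratio Sn/Sf replaced by the constant K in the pseudo version. The function names are hypothetical. The code uses the algebraically equivalent expression conj(H)/(|H|² + ·), which avoids dividing by H directly.

```python
import numpy as np

def wiener_restore(G, H, Sn, Sf):
    """Wiener estimate of the original spectrum.

    G: degraded spectrum, H: transfer function,
    Sn, Sf: power spectra of the noise and the original image
    (Sf is assumed nonzero everywhere).
    """
    H2 = np.abs(H) ** 2
    # (1/H) * |H|^2 / (|H|^2 + Sn/Sf) == conj(H) / (|H|^2 + Sn/Sf)
    return np.conj(H) / (H2 + Sn / Sf) * G

def pseudo_wiener_restore(G, H, K):
    """Pseudo-Wiener estimate: Sn/Sf is replaced by a constant K."""
    H2 = np.abs(H) ** 2
    # With K = 0 this is a plain inverse filter and is undefined
    # wherever H is exactly zero.
    return np.conj(H) / (H2 + K) * G
```

Both functions work on spectra, so the restored image would be obtained by applying an inverse FFT to the result with the same centering convention used for H.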

Results

Below are our results for different parameter values. In each plot, the x-axis shows the K values and the y-axis shows the F, C and Q values.

Figure 1. Restoration for the test image with motion blur parameters a=0.05, b=0.05 and T=1

Figure 2. Restoration for the test image with motion blur parameters a=0.05, b=0.03 and T=1

To quantify the quality of the restored images, we used Linfoot's criteria. F is the fidelity, or the general similarity of the restored image to the original; C is the structural content, which gives the relative sharpness of the images; and Q is the correlation quality, which measures the alignment of peaks. Perfect reconstruction occurs when F=C=Q=1.
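The three criteria can be computed as in the sketch below. It assumes the commonly quoted definitions, which may differ in normalization from the ones used for the figures: F = 1 - ⟨(o - r)²⟩/⟨o²⟩, C = ⟨r²⟩/⟨o²⟩ and Q = ⟨o·r⟩/⟨o²⟩, where o is the original image, r is the restored image and ⟨·⟩ is the mean over all pixels. The function name `linfoot` is hypothetical.

```python
import numpy as np

def linfoot(original, restored):
    """Return (F, C, Q) under the assumed definitions of Linfoot's criteria."""
    o = np.asarray(original, dtype=float)
    r = np.asarray(restored, dtype=float)
    energy = np.mean(o ** 2)                      # <o^2>, assumed nonzero
    F = 1.0 - np.mean((o - r) ** 2) / energy      # fidelity
    C = np.mean(r ** 2) / energy                  # structural content
    Q = np.mean(o * r) / energy                   # correlation quality
    return F, C, Q
```

Under these definitions Q = (F + C)/2, so only two of the three values are independent, and an identical restoration gives F = C = Q = 1.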

From Figures 1 and 2, the Wiener filter gives the best restoration, followed by the pseudo-Wiener filter with K=0.0001. As K becomes larger, more blurring is observed. Lower K values give sharper reconstructions, but with amplified noise. In the extreme case K=0, where the pseudo-Wiener filter reduces to a direct inverse filter, the restored image is unrecognizable. The direction of the lines in the K=0 result reflects the parameters a and b (refer also to Figure 3).

Below is our full data.

Figure 3. Full restoration data.

Source of test image: http://www.armadaanime.com/poster-belldandy-field.jpg

From Figure 3, we can observe that images degraded with a higher T value (in our case, T=3) are restored more accurately than those with a lower T (in our case, T=1).

For this activity, I'll give myself a 10 for completing the activity and for having satisfactory results.
