In this activity, we are asked to extract information, specifically handwriting, from a scanned document (Figure 1). This activity integrates the techniques learned in the previous activities. The first task is to clean the image by removing the horizontal and vertical lines present. Here we used filtering, just like in Activity 7. For this part, only a portion of the image is used.

The image is binarized and inverted (i.e. high becomes low) because we are interested in the text, which should therefore have a value of 1 (i.e. it should be white).
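To make the binarize-and-invert step concrete, here is a minimal sketch in plain Python. The threshold of 128 and the tiny sample patch are assumptions for illustration only; the threshold actually used for the scanned document is not stated here.

```python
def binarize_inverted(gray, threshold=128):
    """Return 1 for dark (ink) pixels and 0 for bright (paper) pixels.

    `threshold` is a hypothetical value for 8-bit grayscale input.
    """
    return [[1 if px < threshold else 0 for px in row] for row in gray]


# Made-up 8-bit patch: bright paper with a dark vertical stroke.
patch = [[250, 40, 245],
         [240, 35, 251]]
binary = binarize_inverted(patch)
```

Thresholding and inverting in one comparison (`px < threshold`) gives the same result as binarizing first and flipping afterwards.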

Figure 2. Top left: Binarized image. Top right: Its FFT. Bottom left: The filter which is binarized before multiplying with the FFT. Bottom right: The filtered image.

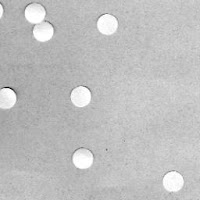
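The filtering in Figure 2 can be sketched roughly as follows. A horizontal line is constant along x, so its energy sits on the vertical frequency axis; a vertical line ends up on the horizontal axis. The sketch assumes a binary mask that zeroes both axes except the DC term, which may differ from the mask actually drawn for this activity. It uses a slow, naive DFT so that it runs with the standard library alone; the function names and the toy image are hypothetical.

```python
import cmath


def dft1(seq, sign):
    """Naive 1-D DFT (sign=-1) or unscaled inverse DFT (sign=+1)."""
    n = len(seq)
    return [sum(v * cmath.exp(sign * 2j * cmath.pi * u * k / n)
                for k, v in enumerate(seq))
            for u in range(n)]


def dft2(img, inverse=False):
    """2-D DFT done as row transforms followed by column transforms."""
    sign = 1 if inverse else -1
    h, w = len(img), len(img[0])
    rows = [dft1(r, sign) for r in img]
    cols = [dft1([rows[y][x] for y in range(h)], sign) for x in range(w)]
    scale = h * w if inverse else 1
    return [[cols[x][y] / scale for x in range(w)] for y in range(h)]


def remove_lines(img):
    """Zero the two frequency axes (keeping DC), then transform back."""
    h, w = len(img), len(img[0])
    spectrum = dft2(img)
    # Mask is 0 where exactly one of the frequencies is zero (on an axis,
    # but not at DC), and 1 everywhere else.
    mask = [[0 if (fy == 0) != (fx == 0) else 1 for fx in range(w)]
            for fy in range(h)]
    filtered = [[spectrum[fy][fx] * mask[fy][fx] for fx in range(w)]
                for fy in range(h)]
    return [[v.real for v in row] for row in dft2(filtered, inverse=True)]


# Toy 8x8 image: one full-width horizontal line plus one "text" pixel.
image = [[0] * 8 for _ in range(8)]
image[3] = [1] * 8
image[5][2] = 1

cleaned = remove_lines(image)
# Re-binarize the filtered image (0.5 is an assumed threshold).
rebinarized = [[1 if v > 0.5 else 0 for v in row] for row in cleaned]
```

In this toy case the line drops to the background level while the isolated pixel stays bright. On a real scan the lines are neither perfectly straight nor full width, which is part of why finding the right filter is hard.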

To further remove unwanted white dots, morphological operations from Activities 8 and 9 are also used.

Figure 3. Left: Binarized image of the filtered image. Right: Image after the opening operation.
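As a rough illustration of the opening operation (erosion followed by dilation), here is a sketch in plain Python. The 3x3 square structuring element and the toy image are assumptions; the structuring element actually used for Figure 3 is not stated.

```python
def _morph(img, k, combine):
    """Apply `combine` (all -> erosion, any -> dilation) over a k x k window."""
    h, w = len(img), len(img[0])
    r = k // 2

    def window(y, x):
        # Pixels outside the image are treated as background (0).
        return [img[y + dy][x + dx]
                if 0 <= y + dy < h and 0 <= x + dx < w else 0
                for dy in range(-r, r + 1) for dx in range(-r, r + 1)]

    return [[int(combine(window(y, x))) for x in range(w)] for y in range(h)]


def erode(img, k=3):
    return _morph(img, k, all)


def dilate(img, k=3):
    return _morph(img, k, any)


def opening(img, k=3):
    """Erosion removes small specks; dilation restores what survived."""
    return dilate(erode(img, k), k)


# Toy image: a 3x3 blob (kept) and an isolated white dot (removed).
noisy = [[0] * 7 for _ in range(7)]
for y in range(1, 4):
    for x in range(1, 4):
        noisy[y][x] = 1
noisy[5][5] = 1

opened = opening(noisy)
```

Note that anything thinner than the structuring element is erased along with the dots, so thin pen strokes can be lost in this step.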

After cleaning, thinning is applied to make the text one pixel thick.

Figure 4. Image after thinning operation.
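For reference, one common way to thin a binary image to one-pixel width is the Zhang-Suen algorithm, sketched below in plain Python. This is a stand-in chosen for illustration; the thinning routine actually used for Figure 4 may work differently. The sketch assumes the image has a one-pixel border of zeros.

```python
def zhang_suen(img):
    """Thin a 0/1 image (with a zero border) using Zhang-Suen passes."""
    img = [row[:] for row in img]  # work on a copy
    h, w = len(img), len(img[0])

    def neighbours(y, x):
        # P2..P9: clockwise, starting from the pixel directly above.
        return [img[y - 1][x], img[y - 1][x + 1], img[y][x + 1],
                img[y + 1][x + 1], img[y + 1][x], img[y + 1][x - 1],
                img[y][x - 1], img[y - 1][x - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):
            to_clear = []
            for y in range(1, h - 1):
                for x in range(1, w - 1):
                    if img[y][x] != 1:
                        continue
                    n = neighbours(y, x)
                    b = sum(n)  # number of white neighbours
                    # number of 0 -> 1 transitions around the pixel
                    a = sum(n[i] == 0 and n[(i + 1) % 8] == 1
                            for i in range(8))
                    p2, p4, p6, p8 = n[0], n[2], n[4], n[6]
                    if step == 0:
                        ok = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        ok = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and ok:
                        to_clear.append((y, x))
            # Clear only after the whole pass so decisions are simultaneous.
            for y, x in to_clear:
                img[y][x] = 0
            changed = changed or bool(to_clear)
    return img


# Toy image: a horizontal bar three pixels thick.
bar = [[0] * 9 for _ in range(7)]
for y in range(2, 5):
    for x in range(1, 8):
        bar[y][x] = 1

skeleton = zhang_suen(bar)
```

For this bar, only pixels on the middle row survive, although the skeleton comes out shorter than the original bar at both ends.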

The text in the final image is not recognizable, indicating that the cleaning procedure is insufficient.

Finally, correlation is also used to find occurrences of the word "DESCRIPTION", utilizing the technique in Activity 5.

Figure 5. Locations of the word "DESCRIPTION".
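The idea behind the correlation step can be sketched as follows: slide a small template over the binary image, count the overlapping white pixels at each offset, and keep the offsets where the count peaks. This sketch computes the correlation directly rather than through the FFT, which is an assumption made to keep it short. The tiny template stands in for the word image, and the names and the `frac` parameter are hypothetical.

```python
def correlate(img, tmpl):
    """Direct cross-correlation of a binary image with a binary template."""
    H, W = len(img), len(img[0])
    h, w = len(tmpl), len(tmpl[0])
    return [[sum(img[y + i][x + j] * tmpl[i][j]
                 for i in range(h) for j in range(w))
             for x in range(W - w + 1)]
            for y in range(H - h + 1)]


def find_matches(img, tmpl, frac=1.0):
    """Return (row, col) offsets whose score reaches frac * best possible."""
    peak = sum(map(sum, tmpl))  # score of a perfect overlap
    scores = correlate(img, tmpl)
    return [(y, x)
            for y, row in enumerate(scores)
            for x, s in enumerate(row)
            if s >= frac * peak]


# Toy template and a page containing it at two places.
template = [[1, 0, 1],
            [0, 1, 0]]
page = [[0] * 8 for _ in range(6)]
for top, left in [(1, 1), (3, 4)]:
    for i in range(2):
        for j in range(3):
            page[top + i][left + j] |= template[i][j]

matches = find_matches(page, template)
```

Lowering `frac` below 1.0 tolerates imperfect matches, at the cost of more false positives. On a noisy scan, that trade-off decides how many locations get marked.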

What's annoying... Scilab crashes on Windows Vista when mogrify is used.

For this activity, I'll give myself a 10 because I was able to use the techniques from the previous activities, although I'm still not good at filtering. Finding the right filter is a hard task.